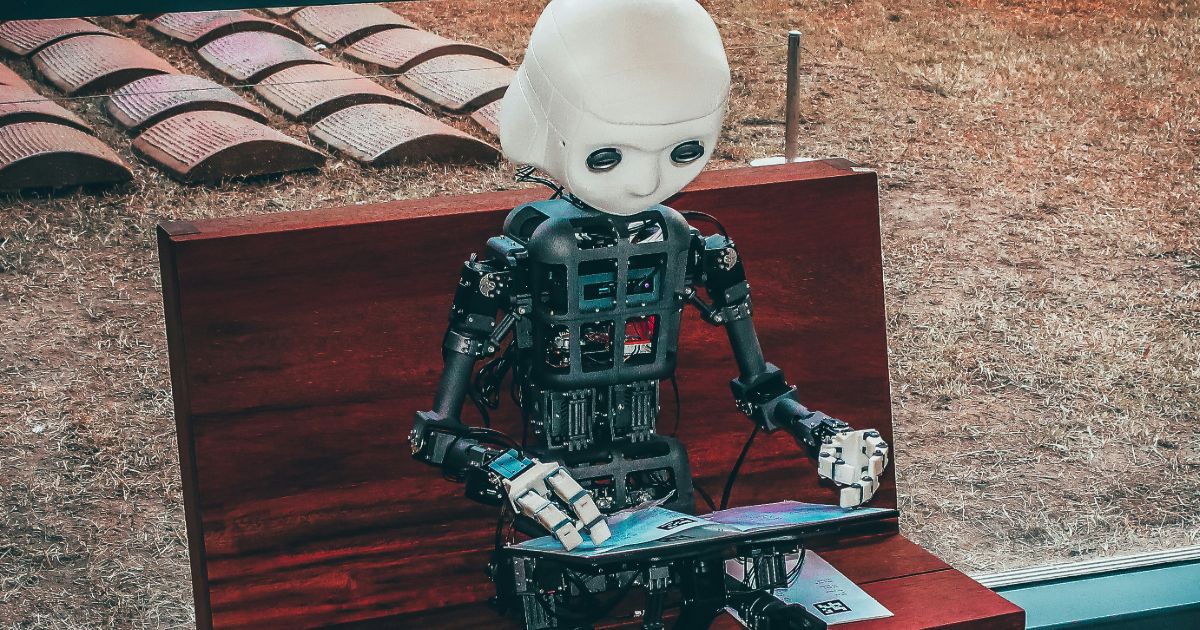

Photo by Andrea De Santis on Unsplash

AI-Assisted Research: Vetting Machine-Generated Insights


AI is getting faster, flashier—and more opinionated. With just a few clicks, modern research tools can now generate themes, patterns, even full-blown research summaries. Sounds like a dream, right? But in UX research, where trust, nuance, and rigor matter most, this new wave raises a real question: Can you move fast and still get it right? Welcome to the world of AI-assisted UX research synthesis—where speed is impressive, but human judgment is still non-negotiable. This article explores how to leverage AI tools without letting them compromise your insights.

What Is AI-Assisted UX Research Synthesis?

Let’s break it down. AI-assisted UX research synthesis refers to the use of generative AI to help analyze qualitative data—like interview transcripts, usability feedback, or survey comments.

AI can:

- Identify patterns or repeated phrases

- Summarize large volumes of text

- Suggest themes

- Generate rough first-draft insights

What it can’t do reliably (yet) is:

- Understand sarcasm, hesitation, or body language

- Weigh strategic context

- Spot emotional nuance or outlier impact

- Prioritize one insight over another based on business or user value

That’s where you—the researcher, strategist, or designer—come in.

The Risk: False Confidence in Machine-Generated Insights

The problem isn’t just that AI can be wrong. It’s that it sounds right even when it is. Machine-generated summaries are often clean, well-worded, and dangerously vague. They feel like truth, but they may rest on shallow or misinterpreted signals.

Signs you might be over-trusting AI:

- You use a theme without checking the original quotes

- You can’t trace an insight back to its source

- Multiple themes feel generic (“users want a better experience”)

- You’re not sure what data the summary actually covered

- You’ve skipped your normal synthesis process entirely

AI can help you start your synthesis. But it should never replace it.

When to Use AI—and When to Step In

Here’s how to think about AI as a co-pilot, not a lead researcher.

Use AI for:

- Early clustering of qualitative feedback

- Getting a fast overview of large datasets

- Drafting affinity groups or quote summaries

- Generating working hypotheses you can test

- Spotting potential duplicates in large research repositories

Don’t rely on AI for:

- Final wording of insights

- Thematic prioritization

- Synthesizing non-verbal cues

- Interpreting emotion or uncertainty

- Translating nuance across user segments

Think of it this way: AI helps you clear the table. It doesn’t set the strategy.

How to Vet AI-Generated Research Summaries

Want to keep your research rigorous and efficient? Use this human-in-the-loop checklist:

- Traceability check: Can every insight be tied to real quotes or evidence?

- Language precision: Is the AI using vague generalizations (“users like X”) or actual behavior-based phrasing?

- Theme validation: Is each AI-generated theme supported by several participants, or is it just one person repeating the same point?

- Contradiction spotting: Does the summary skip over conflict, confusion, or disagreement between users?

- Strategic alignment: Are the AI’s insights aligned with your goals, or are they just patterns without context?
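If your AI tool can export its themes together with the quotes it cites, part of the traceability check can be automated. The Python sketch below is a minimal illustration under stated assumptions, not a feature of any tool mentioned in this article. It assumes a hypothetical setup where transcripts are plain text keyed by participant ID and each insight carries the quotes the AI cited for it. It flags any cited quote that can't be found word for word in the transcripts (after normalizing case, curly quotes, and whitespace) and records which participants each insight traces back to. That participant list also helps with theme validation: a theme backed by a single participant deserves a second look.

```python
import re
from dataclasses import dataclass, field


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace
    so small formatting differences don't cause false misses."""
    text = text.lower()
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip()


@dataclass
class Insight:
    # Hypothetical structure: an AI-generated statement plus the quotes it cites.
    statement: str
    quotes: list[str]


@dataclass
class TraceReport:
    statement: str
    missing_quotes: list[str] = field(default_factory=list)
    participants: set[str] = field(default_factory=set)

    @property
    def traceable(self) -> bool:
        # Traceable only if at least one quote was found and none are missing.
        return bool(self.participants) and not self.missing_quotes


def check_traceability(insights: list[Insight], transcripts: dict[str, str]) -> list[TraceReport]:
    """For each insight, find which transcripts contain each cited quote verbatim."""
    normalized = {pid: normalize(text) for pid, text in transcripts.items()}
    reports = []
    for insight in insights:
        report = TraceReport(insight.statement)
        for quote in insight.quotes:
            q = normalize(quote)
            sources = {pid for pid, text in normalized.items() if q in text}
            if sources:
                report.participants |= sources
            else:
                # Possibly paraphrased or invented: check the source by hand.
                report.missing_quotes.append(quote)
        reports.append(report)
    return reports


# Hypothetical sample data for illustration only.
transcripts = {
    "P1": "Honestly, I couldn't find the export button anywhere.",
    "P2": "Export was hidden. I gave up and took a screenshot instead.",
    "P3": "The dashboard loads fast, which I like.",
}
insights = [
    Insight("Users struggle to find export",
            ["I couldn't find the export button", "Export was hidden."]),
    Insight("Users want a better experience",
            ["I want everything to be easier"]),
]

for r in check_traceability(insights, transcripts):
    print(r.statement, r.traceable, sorted(r.participants), r.missing_quotes)
```

Exact substring matching is deliberately strict. AI tools sometimes paraphrase or stitch quotes together, and those will show up as missing, which is exactly when you should go back to the source. A passing check only tells you the quotes exist; it says nothing about whether the insight interprets them correctly. That part is still your job.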

Tools to Watch—and How to Use Them Wisely

Many modern UX tools now include AI-powered synthesis features. A few examples:

- Dovetail: Can auto-generate themes and tag transcripts

- Condens: Offers AI-suggested codes and summaries

- Notably: Uses AI to suggest insights and themes from research notes

- Aurelius: Includes AI to support organizing and synthesizing insights

How to use them wisely:

- Don’t treat the first result as final

- Always read the source quotes yourself

- Customize themes based on your project’s strategic context

- Document when and how AI was used in your synthesis process

Rethinking Rigor in the Age of AI

Here’s the twist: using AI can support rigor—when done intentionally.

You gain time to:

- Go deeper into the data that matters

- Focus on storytelling, not just sorting

- Spot ethical red flags earlier

- Test more hypotheses, with clearer evidence behind each one

Rigor isn’t about doing everything manually. It’s about doing it deliberately.

You’re Still the Researcher

AI tools are fast, impressive, and improving every day. But they’re not strategic thinkers. They don’t know your users. And they don’t know what your team is trying to build.

You do.

So let the machine help you move faster. But don’t let it do your thinking for you. Because insight—real, strategic, user-centered insight—isn’t generated. It’s earned.
